Embedded Rust

Asynchronous Programming

Introduction

The Rust programming language has grown in popularity over the last few years and has seen some uptake in Embedded software. Rust introduces a number of features that are very pertinent to Embedded programming, including improvements to the way memory safety is handled. The asynchronous programming framework Rust provides is also very interesting from an Embedded standpoint.

Asynchronous processes are an inherent part of Embedded programming because of the amount of interaction with hardware. A microcontroller application will often offload as much work as it can onto hardware peripherals, allowing the processor to sleep and only wake up when an event requires attention. Asynchronous programming provides a framework for writing these kinds of event-driven programs in a clean way.

This article introduces asynchronous programming in Rust and explores how it is realized in a popular asynchronous executor for Embedded systems: the Embassy Project executor.

What is asynchronous programming?

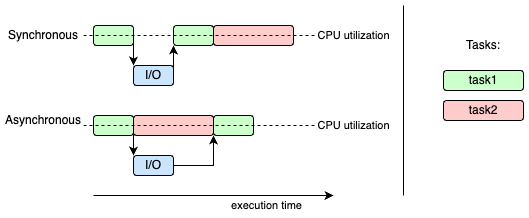

Asynchronous programming is a style of concurrent programming in which the execution of a task can be suspended while the processor waits for some event the task needs in order to continue. This allows other tasks to run in the meantime, making more efficient use of the CPU.

In microcontroller environments, it is commonplace to have to wait for an external event or operation to complete before proceeding. One example is waiting for an I/O (input/output) transaction, such as an SPI transfer, to complete. In a synchronous system, the task would block until the event occurs, and no progress would be made on any other task in the meantime.

Rust Async Framework

The Rust asynchronous framework revolves around the use of futures, which, in a nutshell, are work/computations that will be completed at some point in the future. Rust futures must implement the `Future` trait, which is described in more detail later on.

Building on the `Future` trait, Rust uses two main keywords to enable asynchronous programming:

await

`.await` is applied to a future and indicates that the current task's execution may be suspended until the future's work is completed.

Futures in Rust are lazy: they do nothing until they are polled, which normally happens when you `.await` them (or hand them to an executor).

For example, you might have a future that reads some data from a chip attached to the SPI bus. Calling `.await` on this future marks a point in the code where we may have to wait some time for I/O.

If the data is not ready yet, control is handed back to the caller, which can decide how it wants to handle the situation. One possibility is that the caller simply keeps polling to see if the future has finished. Another is that it makes progress on futures in other tasks while waiting.
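The laziness described above can be observed on a host machine with a minimal, std-only sketch. This is not Embassy code. `NoopWaker` and `laziness_demo` are hypothetical names. The future is polled once by hand, which is the job `.await` or an executor normally does.

```rust
use std::cell::Cell;
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing; enough to poll a future once by hand.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Hypothetical demo: returns (body_ran_before_poll, body_ran_after_poll).
fn laziness_demo() -> (bool, bool) {
    let ran = Cell::new(false);
    // Creating the future only captures `ran`; the body does not execute yet.
    let fut = async {
        ran.set(true);
    };
    let ran_before_poll = ran.get();

    // Polling is what actually drives the body (`.await` does this for us).
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let finished = matches!(fut.as_mut().poll(&mut cx), Poll::Ready(()));
    (ran_before_poll, finished && ran.get())
}
```

`laziness_demo()` returns `(false, true)`. The body has not run when the future is created, and it runs on the first poll.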

async

The `async` keyword is used to mark a function as asynchronous. Typically, this indicates that the function contains one or more futures that will have to be awaited.

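Marking a function as `async` makes it return a future. Calling the function produces a value that implements `Future`, and the function body only runs when that future is polled, for example by awaiting it.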
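As a rough, std-only illustration, an `async fn` behaves much like an ordinary function returning `impl Future`. The names `double`, `double_desugared` and `poll_once` are made up for this sketch. `poll_once` is a hypothetical helper that polls a future once with a do-nothing waker.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

// An `async fn`...
async fn double(x: u32) -> u32 {
    x * 2
}

// ...is roughly sugar for an ordinary function that returns `impl Future`.
fn double_desugared(x: u32) -> impl Future<Output = u32> {
    async move { x * 2 }
}

/// Hypothetical helper: polls a future exactly once using a no-op waker.
fn poll_once<F: Future>(fut: F) -> Poll<F::Output> {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    fut.as_mut().poll(&mut cx)
}
```

Both `poll_once(double(21))` and `poll_once(double_desugared(21))` give `Poll::Ready(42)`. Calling either function by itself does no work; it only builds the future.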

```rust
use embassy_time::Timer;

async fn timer_function() {
    defmt::info!("Starting timer");
    Timer::after_secs(2).await;
    defmt::info!("Timer elapsed");
}

async fn other_function() {
    timer_function().await; // If await was not here, timer_function would not be processed
}
```

Here we see an example using `async` functions. `other_function` is an `async` function because it contains an awaited future (the `async`-marked `timer_function`). `timer_function` is also `async`, because it contains the awaited future `Timer::after_secs()`.

It is also possible to have an `async fn` that does not await any future. Since the `async` keyword simply turns the function into something that returns a future, this is still valid. However, when such a function is awaited, it runs to completion in a single poll, just like a normal function.
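The sketch below shows this difference using only the standard library. `YieldOnce`, `no_await`, `with_await` and `poll_once` are hypothetical names. An `async fn` without an `.await` is `Ready` on its first poll. One that awaits a not-yet-ready future returns `Pending` at that point.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Hypothetical hand-written future: `Pending` on its first poll, `Ready` afterwards.
struct YieldOnce(bool);

impl Future for YieldOnce {
    type Output = ();

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.0 {
            return Poll::Ready(());
        }
        self.0 = true;
        cx.waker().wake_by_ref(); // ask the caller to poll us again
        Poll::Pending
    }
}

// No `.await` inside: completes in a single poll, like a normal call.
async fn no_await() -> u32 {
    1 + 1
}

// Awaits a future that is not ready yet, so the first poll suspends here.
async fn with_await() -> u32 {
    YieldOnce(false).await;
    1 + 1
}

/// Hypothetical helper: polls a future exactly once using a no-op waker.
fn poll_once<F: Future>(fut: F) -> Poll<F::Output> {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    fut.as_mut().poll(&mut cx)
}
```

`poll_once(no_await())` is `Poll::Ready(2)`, while `poll_once(with_await())` is `Poll::Pending`.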

Futures

As described above, futures are work/computations that will be completed sometime in the future.

All futures implement the `Future` trait:

```rust
// The Future trait as defined in core::future (re-exported as std::future::Future)
pub trait Future {
    type Output;

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<Self::Output>;
}
```

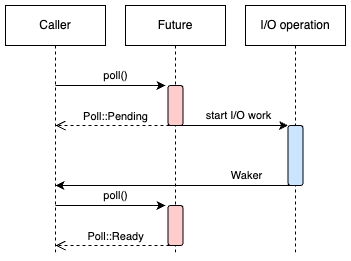

Above is the definition of the `Future` trait. Every future needs to implement the `poll` function, which is used to check whether the future can continue or is still waiting for some work/computation to complete.

The `poll` function returns either `Poll::Pending`, indicating that the future is not ready to continue, or `Poll::Ready`, indicating that the work/computation the future was waiting for is finished and execution can advance. Both are variants of the `Poll` enum, and `Poll::Ready` carries the future's `Output` value.

As mentioned before, it is up to the caller of `poll` to decide what to do with this information. It could poll the future continuously until `Poll::Ready` is returned. This can be very inefficient, however. Consider, for example, a future waiting for a user to push a button, which could take a long time.
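Here is a std-only sketch of the "just keep polling" strategy. `Countdown` is a made-up future standing in for slow hardware, and `busy_poll` is a hypothetical naive caller. Every `Pending` result costs another full poll. With a button that might not be pressed for minutes, the CPU would spin the whole time.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Hypothetical stand-in for slow hardware: not ready until polled `remaining` more times.
struct Countdown {
    remaining: u32,
}

impl Future for Countdown {
    type Output = &'static str;

    fn poll(mut self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<Self::Output> {
        if self.remaining == 0 {
            Poll::Ready("done")
        } else {
            self.remaining -= 1;
            // Not ready yet. No waker is used, so only busy polling finds out later.
            Poll::Pending
        }
    }
}

/// Hypothetical naive caller: polls in a tight loop until `Ready`, counting every poll.
fn busy_poll<F: Future>(fut: F) -> (F::Output, u32) {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    let mut polls = 0;
    loop {
        polls += 1;
        if let Poll::Ready(output) = fut.as_mut().poll(&mut cx) {
            return (output, polls);
        }
    }
}
```

`busy_poll(Countdown { remaining: 3 })` returns `("done", 4)`: three wasted polls before the useful one.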

Alternatively, the caller could do other things while waiting for the future to be ready, or it could go to sleep. This is what the `Context` parameter, `cx`, is useful for.

The `Context` provides access to the caller's `Waker`.

The `Waker` is a mechanism the future uses to tell its caller that it is worth polling again, typically because the work it was waiting for has completed and the next poll will return `Poll::Ready`. The `Waker` can be implemented by the caller in a number of ways, which is why it is passed into the `poll` function. It can be thought of as a callback mechanism: when the future's work is done, it calls whatever the caller has supplied as the `Waker`.
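The following std-only sketch makes the callback analogy concrete. `CountingWaker`, `Shared`, `WaitForDone`, `complete` and `waker_demo` are hypothetical names. The future stores the waker it receives through `cx`, and whoever finishes the work calls it.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that just counts how often it was called (the caller's "callback").
struct CountingWaker(AtomicUsize);

impl Wake for CountingWaker {
    fn wake(self: Arc<Self>) {
        self.0.fetch_add(1, Ordering::SeqCst);
    }
}

/// Hypothetical state shared between the future and whatever completes the work.
#[derive(Default)]
struct Shared {
    done: bool,
    waker: Option<Waker>,
}

/// Hypothetical future that waits for `complete` to be called.
struct WaitForDone(Arc<Mutex<Shared>>);

impl Future for WaitForDone {
    type Output = ();

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        let mut shared = self.0.lock().unwrap();
        if shared.done {
            Poll::Ready(())
        } else {
            // Remember whom to notify once the work finishes.
            shared.waker = Some(cx.waker().clone());
            Poll::Pending
        }
    }
}

/// Hypothetical completion routine: finishes the work and invokes the stored waker.
fn complete(shared: &Mutex<Shared>) {
    let waker = {
        let mut s = shared.lock().unwrap();
        s.done = true;
        s.waker.take()
    };
    if let Some(waker) = waker {
        waker.wake();
    }
}

/// Returns (wake count after first poll, wake count after completion, ready on second poll).
fn waker_demo() -> (usize, usize, bool) {
    let counter = Arc::new(CountingWaker(AtomicUsize::new(0)));
    let waker = Waker::from(Arc::clone(&counter));
    let mut cx = Context::from_waker(&waker);

    let shared = Arc::new(Mutex::new(Shared::default()));
    let mut fut = pin!(WaitForDone(Arc::clone(&shared)));

    assert!(fut.as_mut().poll(&mut cx).is_pending());
    let before = counter.0.load(Ordering::SeqCst);
    complete(&shared);
    let after = counter.0.load(Ordering::SeqCst);
    let ready = fut.as_mut().poll(&mut cx).is_ready();
    (before, after, ready)
}
```

`waker_demo()` returns `(0, 1, true)`. Nothing wakes the caller until the work completes. Then the waker fires once, and the next poll is `Ready`.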

We will see later, in an example, how the `Waker` is implemented.

Executors

An executor is essentially something that runs and manages asynchronous tasks, analogous to a scheduler in a multi-threaded environment. As described before, the executor is the "caller" that polls the futures.

Executors use cooperative scheduling. This means they run a task until it finishes or until it yields control back to the executor (through an `.await` on a future that is not ready). Tasks have no priority relative to one another.

```rust
#[embassy_executor::main]
async fn main(spawner: embassy_executor::Spawner) {
    spawner.spawn(green_task()).unwrap();
    spawner.spawn(red_task()).unwrap();
    // In this implementation the executor polls the red_task first, but this is not always the case
}

#[embassy_executor::task]
async fn red_task() {
    loop {
        embassy_futures::yield_now().await; // Future which does nothing but yield control back to the executor
    }
}

#[embassy_executor::task]
async fn green_task() {
    embassy_futures::yield_now().await;
    loop {} // Never awaits again, so control never returns to the executor
}
```

As we can see in this example, the green task ends up taking all of the executor's time once it reaches the `loop {}`. This is because the task never returns and has no `.await` through which it could hand control back to the executor.

An executor's job is essentially to poll each task in its task pool to see whether any progress can be made. When polled, a task runs until one of two things happens. If it reaches an `.await` on a future that is not ready, `poll` returns `Poll::Pending` and control goes back to the executor. If it completes, `poll` returns `Poll::Ready`. If all the tasks in the pool return `Poll::Pending`, the executor can stop polling them and wait for its waker to be triggered by the completion of one of the tasks' futures. Once woken, it can poll the tasks again.
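To illustrate cooperative scheduling, here is a deliberately tiny round-robin executor using only the standard library. It is a sketch, not how Embassy is implemented. `YieldNow`, `run` and `interleaving_demo` are hypothetical names, and `YieldNow` only imitates the idea behind `embassy_futures::yield_now`. The do-nothing waker means every pending task is simply re-queued, whereas a real executor only re-polls tasks whose waker has fired.

```rust
use std::cell::RefCell;
use std::collections::VecDeque;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

/// Hypothetical yield future: `Pending` once (handing control back), then `Ready`.
struct YieldNow {
    yielded: bool,
}

impl Future for YieldNow {
    type Output = ();

    fn poll(mut self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        if self.yielded {
            Poll::Ready(())
        } else {
            self.yielded = true;
            cx.waker().wake_by_ref(); // we want to be polled again soon
            Poll::Pending
        }
    }
}

fn yield_now() -> YieldNow {
    YieldNow { yielded: false }
}

type Task = Pin<Box<dyn Future<Output = ()>>>;

/// Hypothetical round-robin executor: polls tasks in turn until all complete.
fn run(mut tasks: VecDeque<Task>) {
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    while let Some(mut task) = tasks.pop_front() {
        if task.as_mut().poll(&mut cx).is_pending() {
            tasks.push_back(task); // yielded: go to the back of the queue
        } // otherwise the task completed and is dropped here
    }
}

/// Hypothetical demo: two tasks each log their name twice, yielding after every entry.
fn interleaving_demo() -> Vec<&'static str> {
    let log = Rc::new(RefCell::new(Vec::new()));
    let mut tasks: VecDeque<Task> = VecDeque::new();
    for name in ["red", "green"] {
        let log = Rc::clone(&log);
        tasks.push_back(Box::pin(async move {
            for _ in 0..2 {
                log.borrow_mut().push(name);
                yield_now().await;
            }
        }));
    }
    run(tasks);
    Rc::try_unwrap(log).unwrap().into_inner()
}
```

Because both tasks yield after each log entry, `interleaving_demo()` returns `["red", "green", "red", "green"]`. If either task contained a `loop {}` with no `.await`, `run` would never get control back, just like with `green_task` above.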

The Rust standard library does not provide an executor, so there are a number of executors to choose from, or you could write your own. In the Embedded world, the Embassy Project executor is one of the most popular.

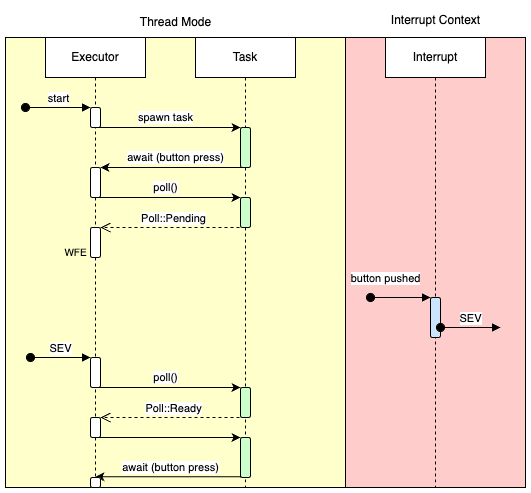

How does Embassy implement the executor for Cortex-M?

The Embassy Project provides an executor that runs in the normal Arm thread mode. The waker used for this executor is built on the `WFE` (wait for event) and `SEV` (send event) instructions. When the `WFE` instruction is executed, the processor enters a low-power sleep state. It can be woken by the `SEV` instruction, which sets the local event register. This means the processor (executor) can go to sleep when all the polled futures return `Poll::Pending`, and wait to be woken by the `SEV`.

The `SEV` is executed from an interrupt handler tied to the completion of the work the future is waiting for.

As an example, consider a future that waits for a user button press. Inside the future, we can set up an interrupt on the GPIO that triggers when the button press is detected. We then implement the interrupt service routine, which should clear the interrupt and run the executor's waker, which in this case is the `SEV` instruction.

Once this is set up, the future returns `Poll::Pending`, and the executor can either go to sleep (`WFE`) or process other tasks. When the interrupt fires, the executor is in one of two states. If it is sleeping, it wakes up. If it is already processing another task, it is awake anyway and will poll the button future when it next gets the chance.
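The same mechanism can be imitated on a host machine with std threads. In this sketch, `thread::park` plays the role of `WFE`, `Thread::unpark` the role of `SEV`, and a spawned thread stands in for the GPIO interrupt. All names (`ThreadWaker`, `ButtonPress`, `button_isr`, `wait_for_press`) are hypothetical. The sketch shows the idea only, not Embassy's actual code.

```rust
use std::future::Future;
use std::pin::{pin, Pin};
use std::sync::{Arc, Mutex};
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};
use std::time::Duration;

/// Hypothetical waker that unparks the executor thread: the host analogue of `SEV`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Hypothetical single-future executor that parks (the analogue of `WFE`) while pending.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(output) => return output,
            // Sleep until woken; park may also return spuriously, so we just poll again.
            Poll::Pending => thread::park(),
        }
    }
}

/// Hypothetical state shared between the button future and the simulated interrupt handler.
#[derive(Default)]
struct ButtonState {
    pressed: bool,
    waker: Option<Waker>,
}

/// Hypothetical future that completes once the "button" has been pressed.
struct ButtonPress(Arc<Mutex<ButtonState>>);

impl Future for ButtonPress {
    type Output = ();

    fn poll(self: Pin<&mut Self>, cx: &mut Context<'_>) -> Poll<()> {
        let mut state = self.0.lock().unwrap();
        if state.pressed {
            Poll::Ready(())
        } else {
            // Register the executor's waker so the "ISR" can wake it.
            state.waker = Some(cx.waker().clone());
            Poll::Pending
        }
    }
}

/// Hypothetical simulated ISR: record the event, then run the registered waker (our `SEV`).
fn button_isr(state: &Mutex<ButtonState>) {
    let waker = {
        let mut s = state.lock().unwrap();
        s.pressed = true;
        s.waker.take()
    };
    if let Some(waker) = waker {
        waker.wake();
    }
}

/// Hypothetical demo: a thread "presses the button" after 50 ms while we wait for it.
fn wait_for_press() -> bool {
    let state = Arc::new(Mutex::new(ButtonState::default()));
    let isr_state = Arc::clone(&state);
    let isr = thread::spawn(move || {
        thread::sleep(Duration::from_millis(50));
        button_isr(&isr_state);
    });
    block_on(ButtonPress(Arc::clone(&state)));
    isr.join().unwrap();
    let pressed = state.lock().unwrap().pressed;
    pressed
}
```

Checking `pressed` and registering the waker under the same lock avoids a lost wake-up. If the "interrupt" fires between the check and `park`, the pending unpark token makes `park` return immediately.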

Examples with Embassy

Waiting for a button press

```rust
use embassy_stm32::peripherals::PA4;

#[embassy_executor::main]
async fn main(spawner: embassy_executor::Spawner) {
    let peripherals = embassy_stm32::init(embassy_stm32::Config::default());
    let button = embassy_stm32::gpio::Input::new(peripherals.PA4, embassy_stm32::gpio::Pull::Down);
    let button_input = embassy_stm32::exti::ExtiInput::new(button, peripherals.EXTI4);
    spawner.spawn(button_task(button_input)).unwrap();
}

#[embassy_executor::task]
async fn button_task(mut button_input: embassy_stm32::exti::ExtiInput<'static, PA4>) {
    loop {
        defmt::info!("Waiting for button push");
        button_input.wait_for_rising_edge().await;
        defmt::info!("Button pushed");
    }
}
```

Here we can see the code for the example we described above, where we are waiting for a user button press. In this case, the executor only has one task.

Several things happen when we call `button_input.wait_for_rising_edge().await`.

First, the interrupt triggered on rising-edge detection (using EXTI) for `PA4` is enabled. Second, the interrupt service routine is registered with the interrupt handler associated with the EXTI line for `PA4`.

The interrupt service routine should mask the bit that triggered the interrupt (in the EXTI peripheral), and then call the `Waker` that the executor registered with the future. The executor's waker implementation, which in this case uses `SEV`, is handed to the future through the `cx` parameter, as we saw in the `Future` trait definition.

Response time to GPIO-triggered EXTI

It is interesting to compare the response time of the Embassy executor waiting for a button press with that of a regular interrupt handler that toggles a GPIO when the interrupt is detected.

Response time between button press and GPIO toggle – embassy executor

Response time between button press and GPIO toggle – regular interrupt

Measured on the STM32F0 Discovery board, the Embassy version took 198 µs between detection of the button push and toggling of the LED (i.e., resuming the task). With the pure interrupt method, this was only 24 µs.

While the difference is relatively large, there is a trade-off to consider when implementing an application. The strength of asynchronous programming frameworks is that programs with multiple tasks are much easier to write, organize and understand. 198 µs is still a reasonable response time, and if you really need a faster response, you can mix the asynchronous framework with regular interrupt-driven code.

```rust
use embassy_stm32::peripherals::{PA4, PC4};

#[embassy_executor::task]
async fn button_task(
    mut button_input: embassy_stm32::exti::ExtiInput<'static, PA4>,
    mut indicator_gpio: embassy_stm32::gpio::Output<'static, PC4>,
) {
    loop {
        defmt::info!("Waiting for button push");
        button_input.wait_for_rising_edge().await;
        indicator_gpio.toggle(); // Toggled GPIO used to measure the response time
    }
}
```

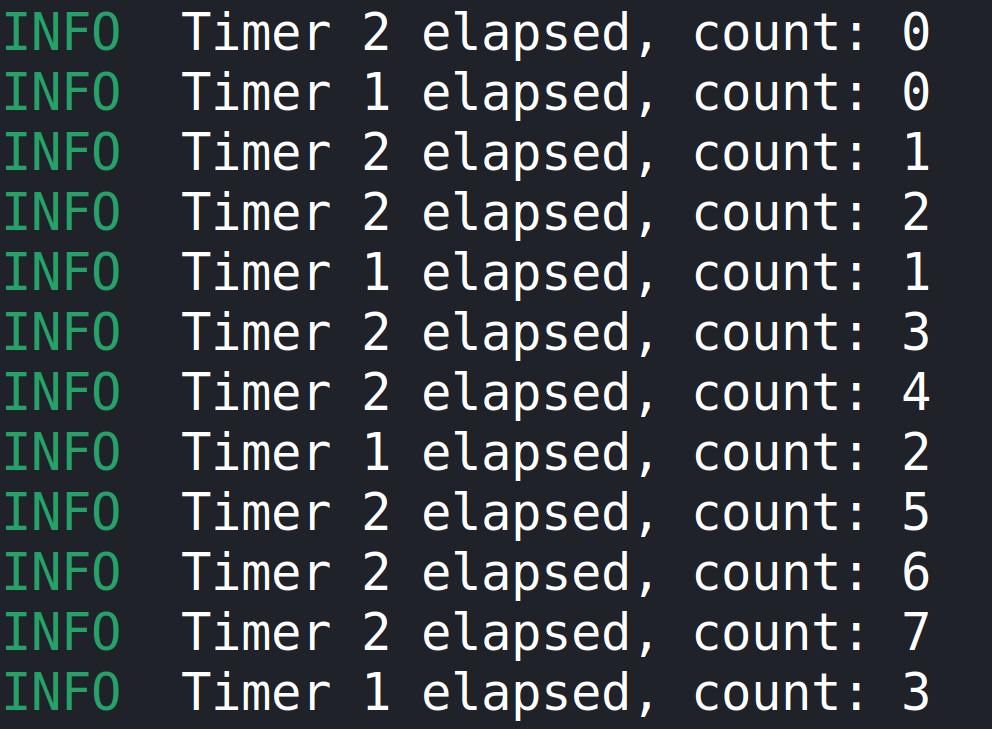

Two timer tasks:

```rust
#[embassy_executor::task]
async fn timer_one_task() {
    let mut count: u32 = 0;
    loop {
        defmt::info!("Timer 1 elapsed, count: {}", count);
        embassy_time::Timer::after_secs(5).await;
        count += 1;
    }
}

#[embassy_executor::task]
async fn timer_two_task() {
    let mut count: u32 = 0;
    loop {
        defmt::info!("Timer 2 elapsed, count: {}", count);
        embassy_time::Timer::after_secs(2).await;
        count += 1;
    }
}
```

Here we see an example with two tasks, which is a more typical scenario when using asynchronous programming. One task waits 5 seconds between messages and the other waits 2 seconds. Neither timer blocks execution, which is why the "Timer elapsed" messages appear interleaved.
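The sketch below replays the two tasks on a host with a simulated clock, to show why the messages interleave without real hardware. Everything here is an assumption for illustration. `Sleep`, `after_secs`, `timer_task` and `run_for` are hypothetical, and time is counted in whole virtual seconds. The executor also simply re-polls every task each tick instead of relying on wakers and a hardware timer.

```rust
use std::cell::{Cell, RefCell};
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical waker that does nothing.
struct NoopWaker;

impl Wake for NoopWaker {
    fn wake(self: Arc<Self>) {}
}

type Log = Rc<RefCell<Vec<(u64, &'static str)>>>;

/// Hypothetical future that completes once the shared virtual clock reaches `deadline`.
struct Sleep {
    clock: Rc<Cell<u64>>,
    deadline: u64,
}

impl Future for Sleep {
    type Output = ();

    fn poll(self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<()> {
        if self.clock.get() >= self.deadline {
            Poll::Ready(())
        } else {
            Poll::Pending // simplification: relies on the executor re-polling every tick
        }
    }
}

/// Hypothetical stand-in for `Timer::after_secs`, measured in virtual seconds.
fn after_secs(clock: &Rc<Cell<u64>>, secs: u64) -> Sleep {
    Sleep { clock: Rc::clone(clock), deadline: clock.get() + secs }
}

/// Mirrors the timer tasks above: log, then sleep for `period` seconds, forever.
async fn timer_task(name: &'static str, period: u64, clock: Rc<Cell<u64>>, log: Log) {
    loop {
        log.borrow_mut().push((clock.get(), name));
        after_secs(&clock, period).await;
    }
}

/// Hypothetical executor: polls both tasks once per virtual second until the clock passes `until`.
fn run_for(until: u64) -> Vec<(u64, &'static str)> {
    let clock = Rc::new(Cell::new(0));
    let log: Log = Rc::new(RefCell::new(Vec::new()));
    let mut tasks = [
        Box::pin(timer_task("timer 1", 5, Rc::clone(&clock), Rc::clone(&log))),
        Box::pin(timer_task("timer 2", 2, Rc::clone(&clock), Rc::clone(&log))),
    ];
    let waker = Waker::from(Arc::new(NoopWaker));
    let mut cx = Context::from_waker(&waker);
    while clock.get() <= until {
        for task in tasks.iter_mut() {
            let _ = task.as_mut().poll(&mut cx); // these tasks never complete
        }
        clock.set(clock.get() + 1); // advance virtual time by one second
    }
    let entries = log.borrow().clone();
    entries
}
```

`run_for(6)` yields timer 2 entries at seconds 0, 2, 4 and 6 and timer 1 entries at 0 and 5, interleaved in time order.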

A note on the Embassy Interrupt Executor

Since async executors use cooperative rather than preemptive scheduling, there is no concept of priority between tasks in an executor. This means a less important task keeps running until its next `.await`, even if a more important task is ready to continue (`Poll::Ready`).

Embassy provides a solution by using the inherent priorities assigned to interrupts. By running an executor in an interrupt context, tasks in that executor have priority over tasks running in the thread-mode executor. For the thread-mode executor, the waker on Cortex-M controllers uses the `SEV` and `WFE` instructions as described above. For the interrupt-context executor, waking the executor simply means pending the interrupt assigned to it. The interrupt used for the executor can be chosen by the user, but it should be one the program does not otherwise use. For example, you can run your executor in the TIMER1 interrupt handler, but then you will not be able to use the interrupt features of the TIMER1 peripheral.

What's more, if you have multiple tasks or task pools that need different priority levels, you can run multiple interrupt executors, or a combination of interrupt and thread-mode executors. Note, though, that when running multiple executors, the time spent context switching between them can have a detrimental effect on the real-time performance of your system.

Conclusion

In this article we have introduced asynchronous programming in Rust and explored how it is implemented in an actual executor.

The asynchronous framework provided by Rust simplifies the development of event-driven applications on microcontrollers, making it easier to develop applications with multiple concurrent tasks.

Rust is seeing relatively slow uptake in the Embedded sphere, but features like asynchronous Rust may help it be chosen over traditional languages for microcontroller development.

Mikkel Caschetto-Böttcher

Embedded Software Developer